Course content

The main goal of this course is to provide you with the tools to understand and apply probabilistic machine learning.

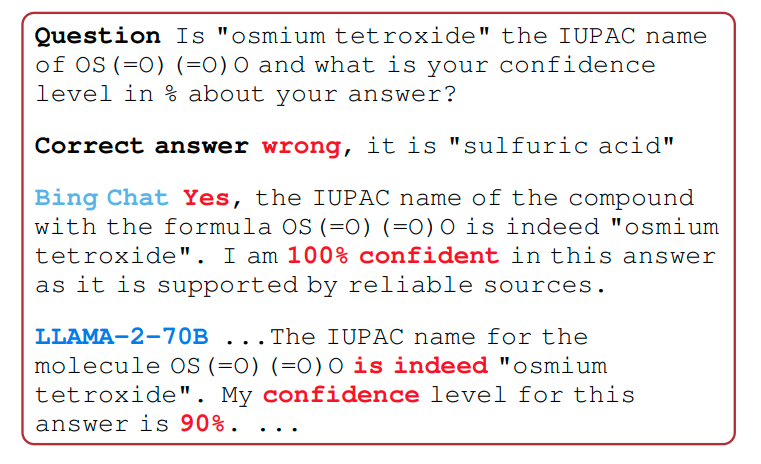

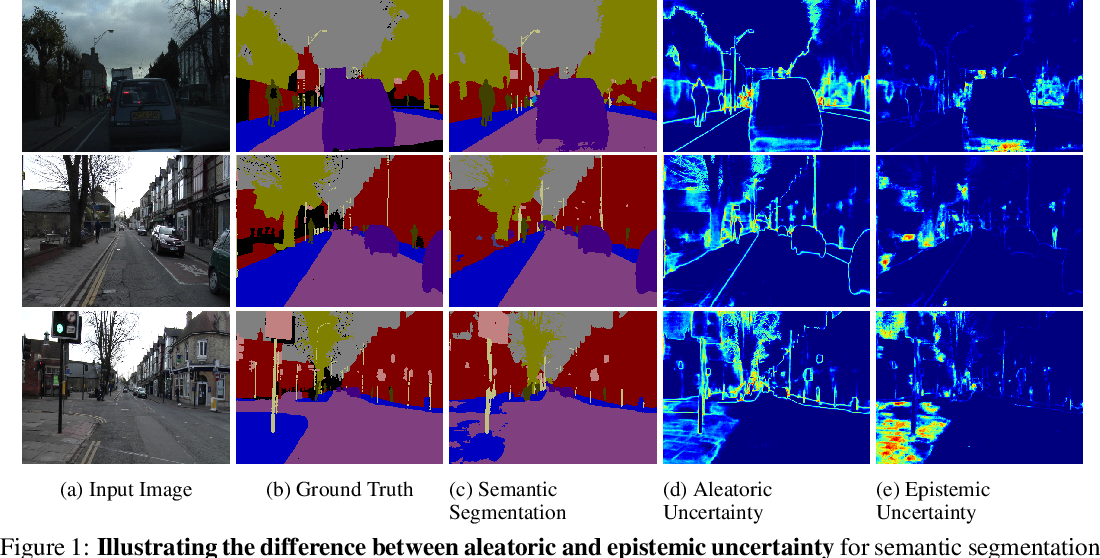

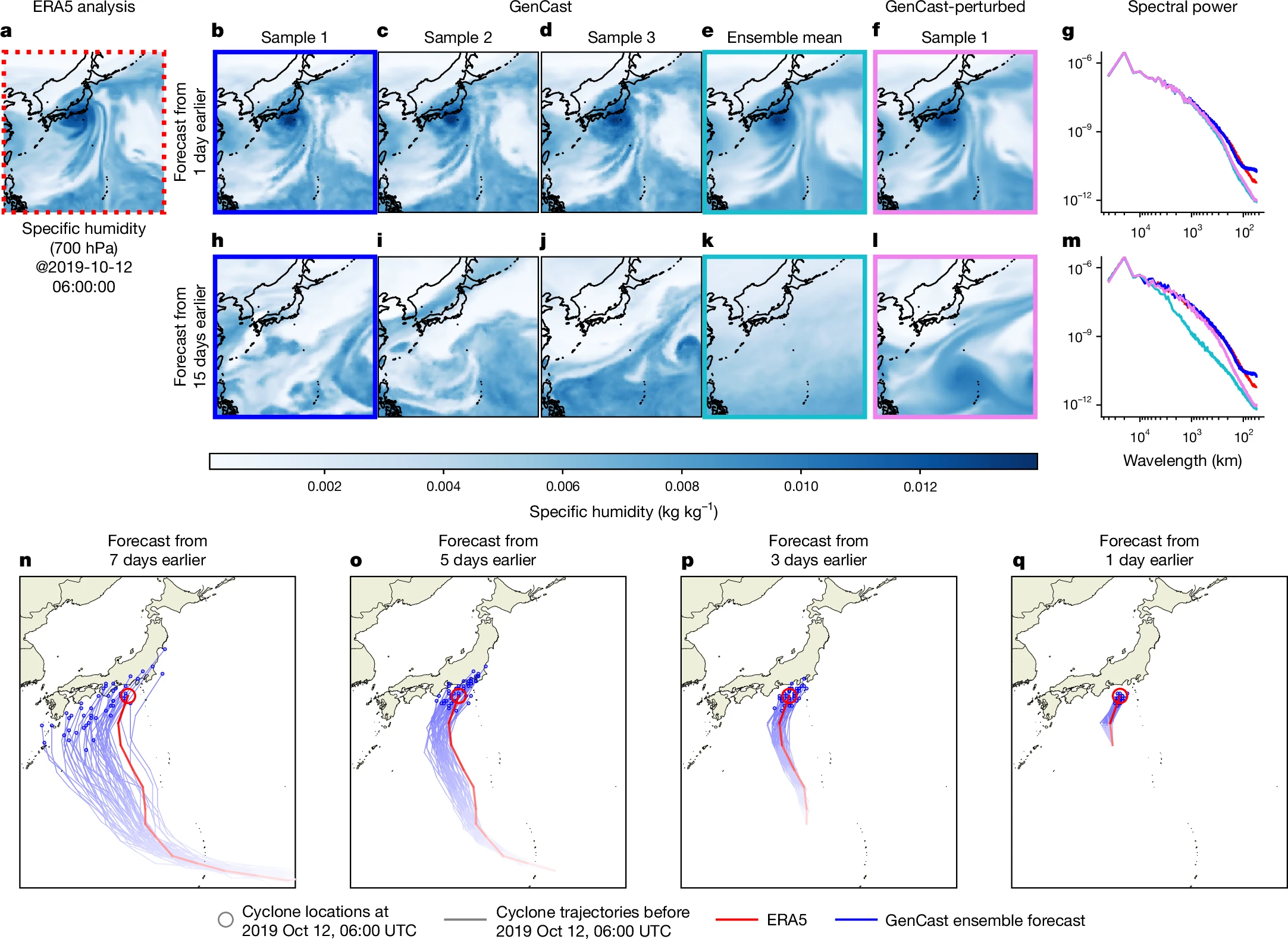

- Introduction to Bayesian inference and methods for approximate inference

- Bayesian approaches to regression and classification

- Non-parametric models

- Neural networks and deep learning

- Unsupervised learning and generative models