Large Scale 3D Scene Reconstruction based on Diffusion Priors and NVS-Oriented Explicit/Implicit Representations

Funding: Huawei France (CIFRE contract)

Investigator(s): Simone Rossi, Pietro Michiardi

Project timeframe: 2023 - 2025

Project description

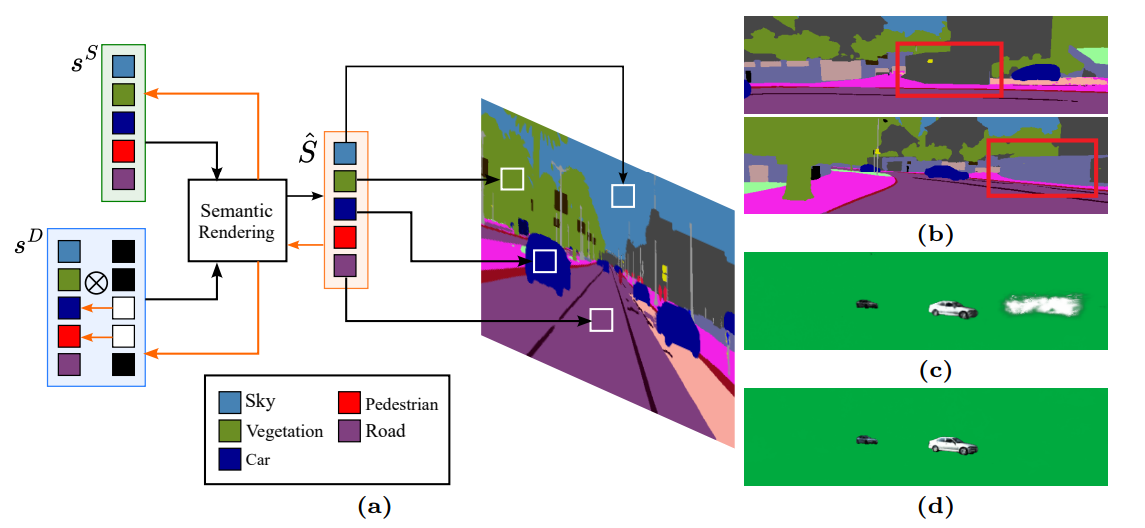

The main focus of this project is to reconstruct large-scale 3D scenes from a limited number of posed observations, in order to generate realistic novel views in a machine-learning and diffusion-based simulator for self-driving vehicles. Implicit representations have been highly successful in the last couple of years thanks to the introduction of Neural Radiance Fields (NeRF), which enable high-fidelity implicit modeling of a specific scene based solely on calibrated image sensors and their poses in the world frame. NeRFs and similar implicit representations are ideal candidates for realistic self-driving simulators, opening up many possibilities for improving downstream tasks (e.g., localization and perception). Furthermore, the recent introduction of 3D Gaussian Splatting (3DGS) as an explicit alternative to NeRF has enabled fast rendering with limited quality degradation.

In this project, we propose to investigate various few-shot techniques for understanding the core semantic and geometric features of a scene, so that background and foreground objects can be modeled individually. This enables photorealistic rendering, even of unseen and occluded parts of the scene. By employing large 3D diffusion models and regularization techniques, our goal is to complete the geometry of both foreground objects and the background scene in dynamic scenarios with few observations and occlusions. The new algorithms developed for in-depth, detailed urban scene understanding will be designed to be as scalable as possible, both in terms of the spatial extent of the scene to be described and in terms of the computational resources needed to analyze the scene and generate the artificial data. Additionally, they will need to inherently handle uncertainties in reconstructing scenes from noisy, unstructured data.